Top Open-Source Prompt Management Platforms 2026

Discover which open-source prompt management platform is right for your team. In-depth comparison of Agenta, Langfuse, Phoenix, Latitude, and Pezzo with features, licensing, and code examples.

Dec 17, 2025

10 minutes

Top Open-Source Prompt Management Platforms: A Deep Dive

Building reliable LLM applications is hard. Teams often struggle with a chaotic development process in which prompts are scattered across code, spreadsheets, and Slack messages. This makes collaboration between developers and subject matter experts difficult. It also makes it hard to track changes and ensure quality in production.

Prompt management platforms solve this problem. They provide a central place to design, version, and deploy prompts. This article compares the top open-source prompt management platforms. We will look at their features, how they work, and what makes them different. Our goal is to help you choose the right platform for your team.

The Contenders

We will compare the following open-source platforms:

Agenta: A full LLMOps platform with a strong focus on collaboration.

Langfuse: An LLM engineering platform with deep observability features.

Arize Phoenix: An AI observability platform with prompt engineering capabilities.

Latitude: An open-source platform for both AI agents and prompt engineering.

Pezzo: A developer-first LLMOps platform focused on cloud-native delivery.

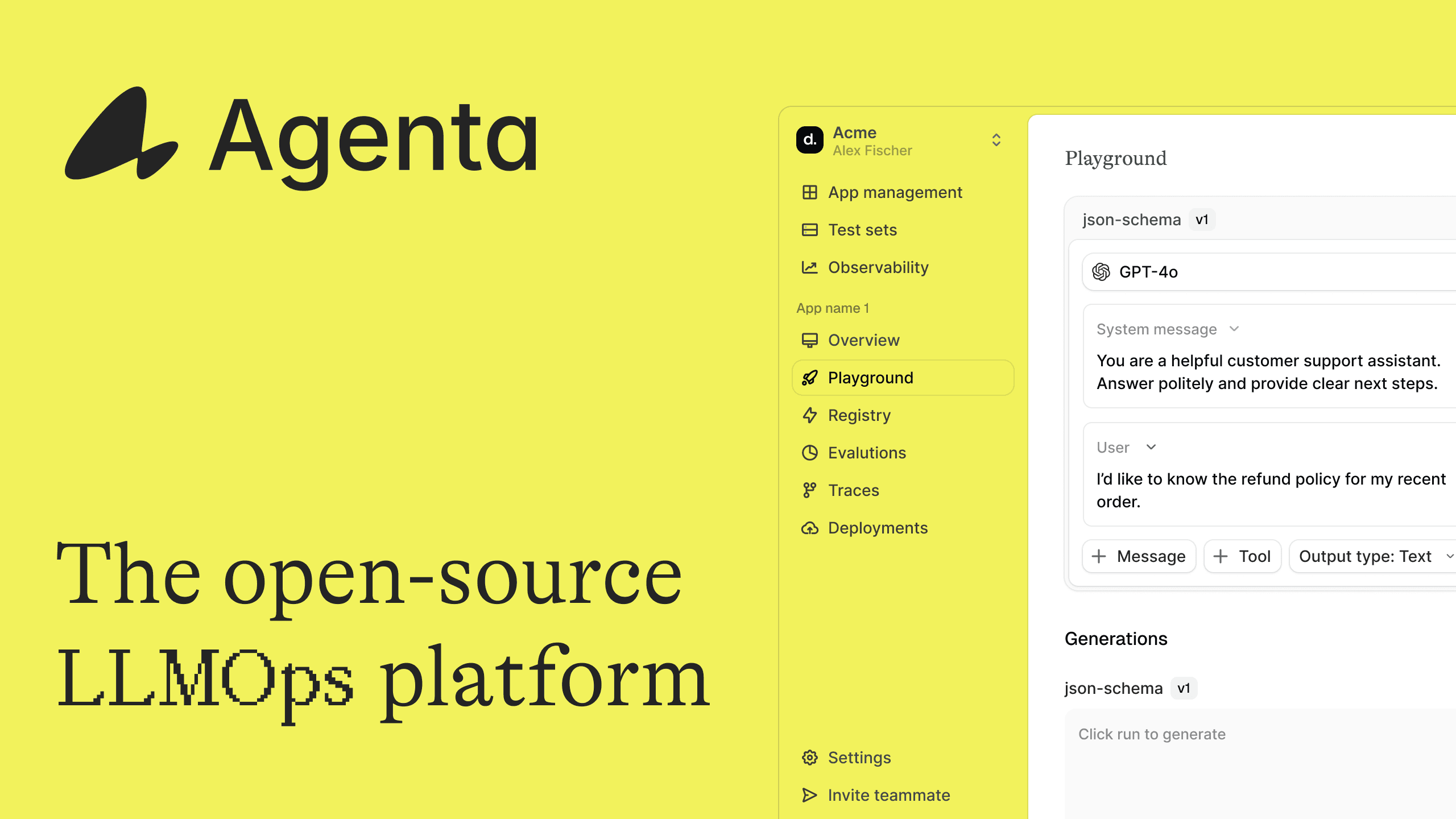

Agenta

Agenta is an open-source LLMOps platform that covers the entire development lifecycle. It provides tools for prompt management, evaluation, and observability. Agenta’s main goal is to enable seamless collaboration between developers and non-developers.

Key Features

Git-like Versioning: Agenta uses a versioning model similar to Git. You can create multiple variants (branches) of a prompt, each with its own commit history. This allows for parallel experimentation without affecting production.

Environments: You can deploy different prompt variants to different environments, such as development, staging, and production. This provides a safe and organized way to manage deployments.

Collaboration First: Agenta is designed for collaboration. Subject matter experts can use the UI to edit prompts, run evaluations, and even deploy changes without writing any code. This empowers the entire team to contribute to the quality of the LLM application.

Full Lifecycle Support: Agenta is not just a prompt management tool. It provides a complete LLMOps solution with integrated evaluation and observability. This means you can manage your prompts, test them systematically, and monitor their performance in production, all in one place.

Permissive License: Agenta is licensed under the MIT license. This means you can use it, modify it, and even sell it, as long as you keep the copyright and license notice.
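To make the variant and environment model described above more concrete, here is a minimal sketch in plain Python. It is not Agenta's implementation; the `Variant` and `PromptApp` classes and their methods are hypothetical names used only for illustration. It shows the core idea: each variant keeps its own commit history, and an environment is a pointer to one specific revision of one variant.

```python
from dataclasses import dataclass, field


@dataclass
class Variant:
    """A branch of a prompt with its own linear commit history (hypothetical)."""
    slug: str
    commits: list = field(default_factory=list)

    def commit(self, parameters: dict) -> int:
        """Store a new revision and return its 1-based revision number."""
        self.commits.append(dict(parameters))
        return len(self.commits)


@dataclass
class PromptApp:
    """Hypothetical registry holding variants and environment pointers."""
    variants: dict = field(default_factory=dict)
    # Maps environment name -> (variant slug, revision number).
    environments: dict = field(default_factory=dict)

    def create_variant(self, slug: str, parameters: dict) -> Variant:
        """Create a variant whose first commit holds the given parameters."""
        variant = Variant(slug)
        variant.commit(parameters)
        self.variants[slug] = variant
        return variant

    def deploy(self, slug: str, environment: str) -> None:
        """Point an environment at the latest revision of a variant."""
        revision = len(self.variants[slug].commits)
        self.environments[environment] = (slug, revision)

    def fetch(self, environment: str) -> dict:
        """Return the parameters currently deployed to an environment."""
        slug, revision = self.environments[environment]
        return self.variants[slug].commits[revision - 1]


app = PromptApp()
app.create_variant("default", {"temperature": 0.7})
app.deploy("default", "production")

# Experiment on a second variant; production keeps serving "default".
experiment = app.create_variant("concise", {"temperature": 0.2})
experiment.commit({"temperature": 0.1})
app.deploy("concise", "staging")
```

Because an environment pins a revision rather than a variant name, new commits on a variant do not reach production until someone deploys them explicitly.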

How it Works

Agenta’s Python SDK allows you to manage prompts programmatically. Here is an example of how to create a new prompt variant, deploy it, and fetch it in your application.

    import agenta as ag
    from pydantic import BaseModel

    # Initialize the SDK (assumes AGENTA_API_KEY is set in the environment)
    ag.init()

    # Define the prompt configuration
    class Config(BaseModel):
        prompt: ag.PromptTemplate

    config = Config(
        prompt=ag.PromptTemplate(
            messages=[
                ag.Message(role="system", content="You are a helpful assistant."),
                ag.Message(role="user", content="Explain {{topic}} in simple terms."),
            ],
            llm_config=ag.ModelConfig(
                model="gpt-3.5-turbo",
                temperature=0.7,
            ),
        )
    )

    # Create a new variant
    variant = ag.VariantManager.create(
        parameters=config.model_dump(),
        app_slug="my-app",
        variant_slug="new-variant",
    )

    # Deploy the variant to production
    ag.DeploymentManager.deploy(
        app_slug="my-app",
        variant_slug="new-variant",
        environment_slug="production",
    )

    # Fetch the configuration from the registry
    prod_config = ag.ConfigManager.get_from_registry(
        app_slug="my-app",
        environment_slug="production",
    )
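The `{{topic}}` placeholder in the user message is filled in when the prompt is used. The SDK handles that substitution for you; the sketch below is not Agenta's code, just a plain-Python illustration of how double-curly placeholders can be resolved. The `render` helper and the `PLACEHOLDER` pattern are hypothetical names.

```python
import re

# Matches {{name}} with optional whitespace inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, variables: dict) -> str:
    """Replace each {{name}} with its value; fail loudly on missing names."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"missing template variable: {name}")
        return str(variables[name])

    return PLACEHOLDER.sub(substitute, template)


message = render("Explain {{topic}} in simple terms.", {"topic": "vector databases"})
```

Raising on a missing variable, rather than leaving the placeholder in the text, is a deliberate choice here: a prompt sent to the model with a literal `{{topic}}` in it is usually a silent bug.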

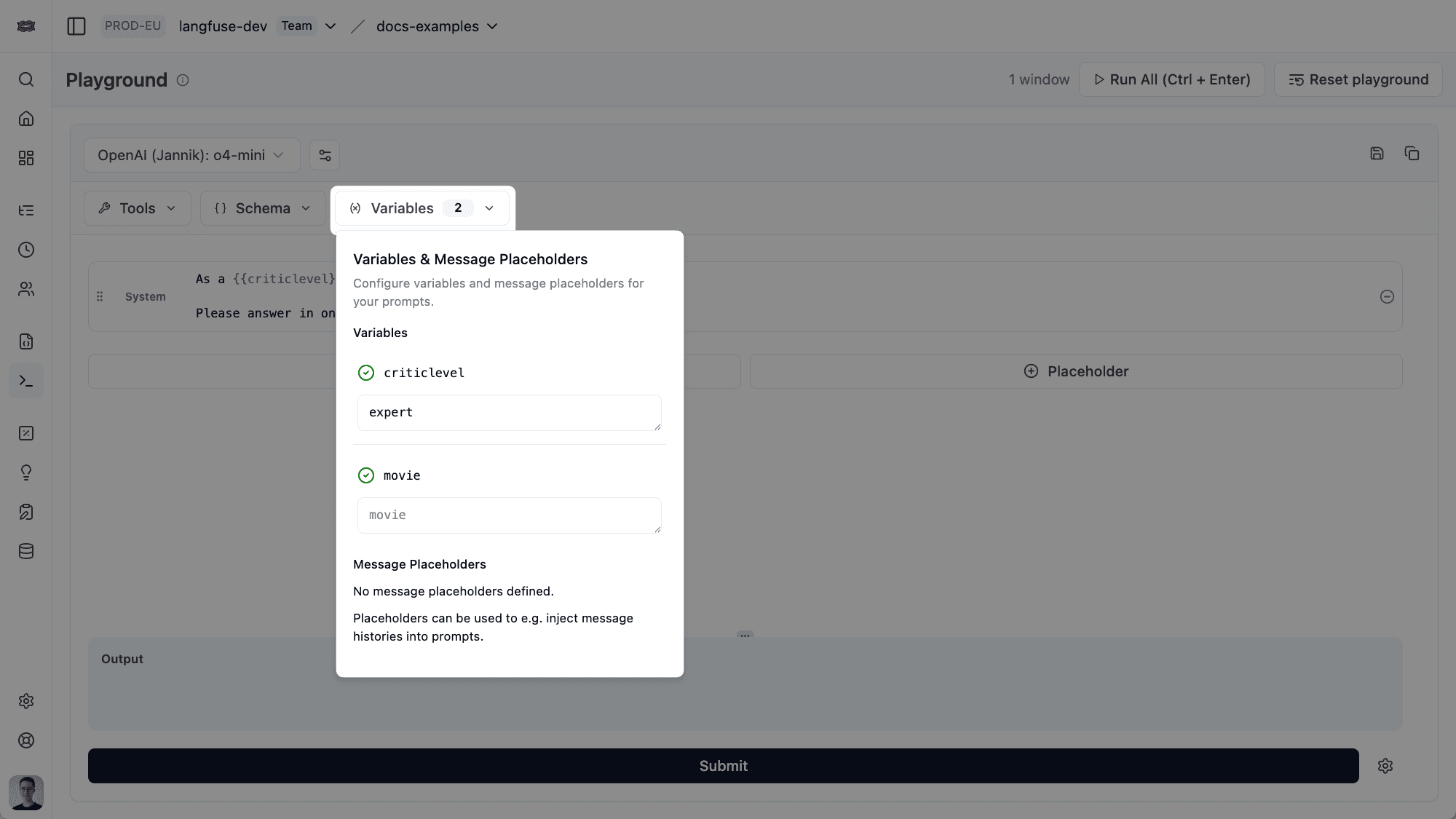

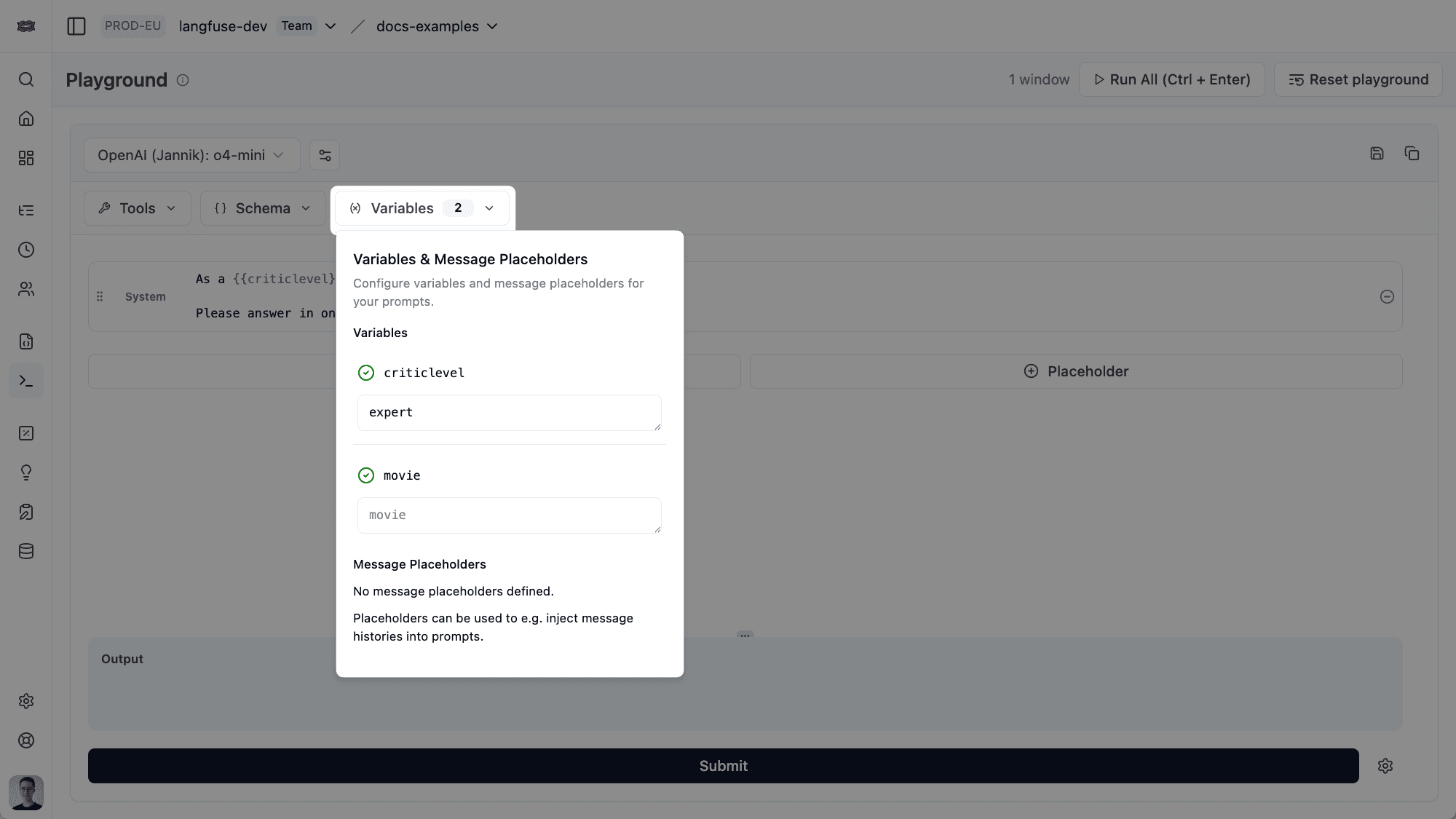

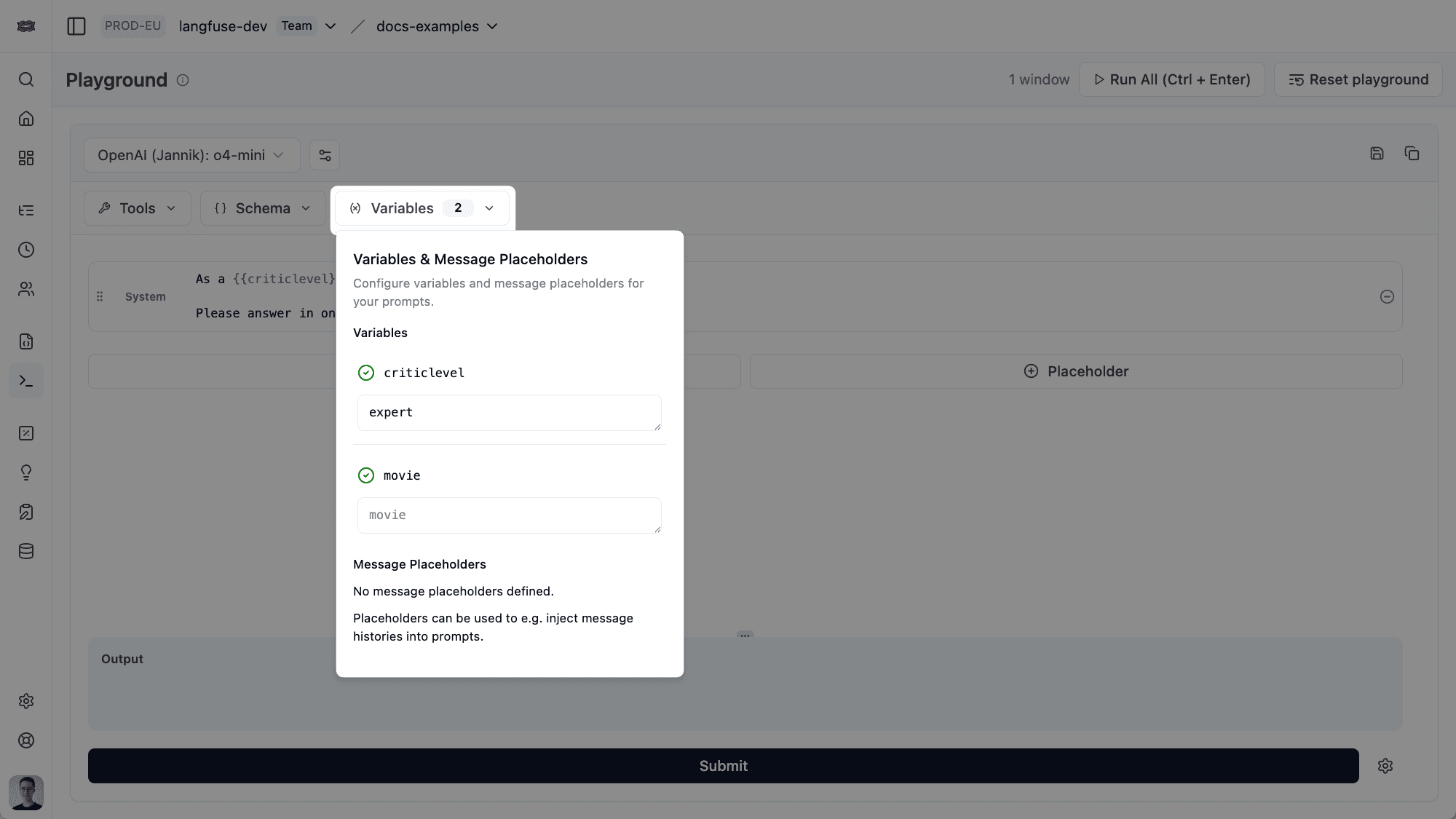

Langfuse

Langfuse is an open-source LLM engineering platform. It provides tools for tracing, prompt management, and evaluations. Langfuse has a strong focus on observability and developer experience.

Key Features

Simple Versioning: Langfuse uses a linear versioning system. Each prompt has a name and a version number. You can use labels like "production" to manage deployments.

UI for Editing: Langfuse provides a user interface for editing and managing prompts. This decouples prompts from the application code.

Strong Observability: Langfuse’s main strength is its tracing and observability features. It provides detailed insights into the performance and cost of your LLM application.

MIT License: Langfuse is also licensed under the MIT license, making it a great choice for commercial projects.
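The linear versioning model described above can be pictured with a small sketch. This is an illustration in plain Python, not Langfuse's implementation; `PromptHistory` and its methods are hypothetical. Every save appends a new version number, and a label such as "production" is a movable pointer to one of those versions.

```python
from typing import Optional


class PromptHistory:
    """Hypothetical linear version history for one named prompt."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.versions = []  # index 0 holds version 1
        self.labels = {}    # label -> version number

    def create_version(self, text: str, labels: tuple = ()) -> int:
        """Append a new version and move the given labels onto it."""
        self.versions.append(text)
        number = len(self.versions)
        for label in labels:
            self.labels[label] = number
        return number

    def set_label(self, label: str, version: int) -> None:
        """Move a label to an existing version (promotion or rollback)."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"unknown version: {version}")
        self.labels[label] = version

    def get(self, version: Optional[int] = None, label: str = "production") -> str:
        """Fetch by explicit version number if given, otherwise by label."""
        number = version if version is not None else self.labels[label]
        return self.versions[number - 1]


history = PromptHistory("my-prompt")
history.create_version("Translate to French: {{text}}", labels=("production",))
history.create_version("Translate the following to French: {{text}}")

# Version 2 exists, but production still serves version 1 until it is promoted.
before_promotion = history.get()
history.set_label("production", 2)
after_promotion = history.get()
```

Rolling back is the same operation as promoting: move the label to an earlier version number.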

How it Works

Langfuse provides a Python SDK to interact with prompts. Here is how you can create and use a prompt.

    from langfuse import Langfuse

    # Reads credentials from the LANGFUSE_PUBLIC_KEY and LANGFUSE_SECRET_KEY environment variables
    langfuse = Langfuse()

    # Create a new prompt version and label it for production
    prompt = langfuse.create_prompt(
        name="my-prompt",
        prompt="Translate the following to French: {{text}}",
        labels=["production"],
    )

    # Get the version currently labeled "production"
    prod_prompt = langfuse.get_prompt("my-prompt", label="production")

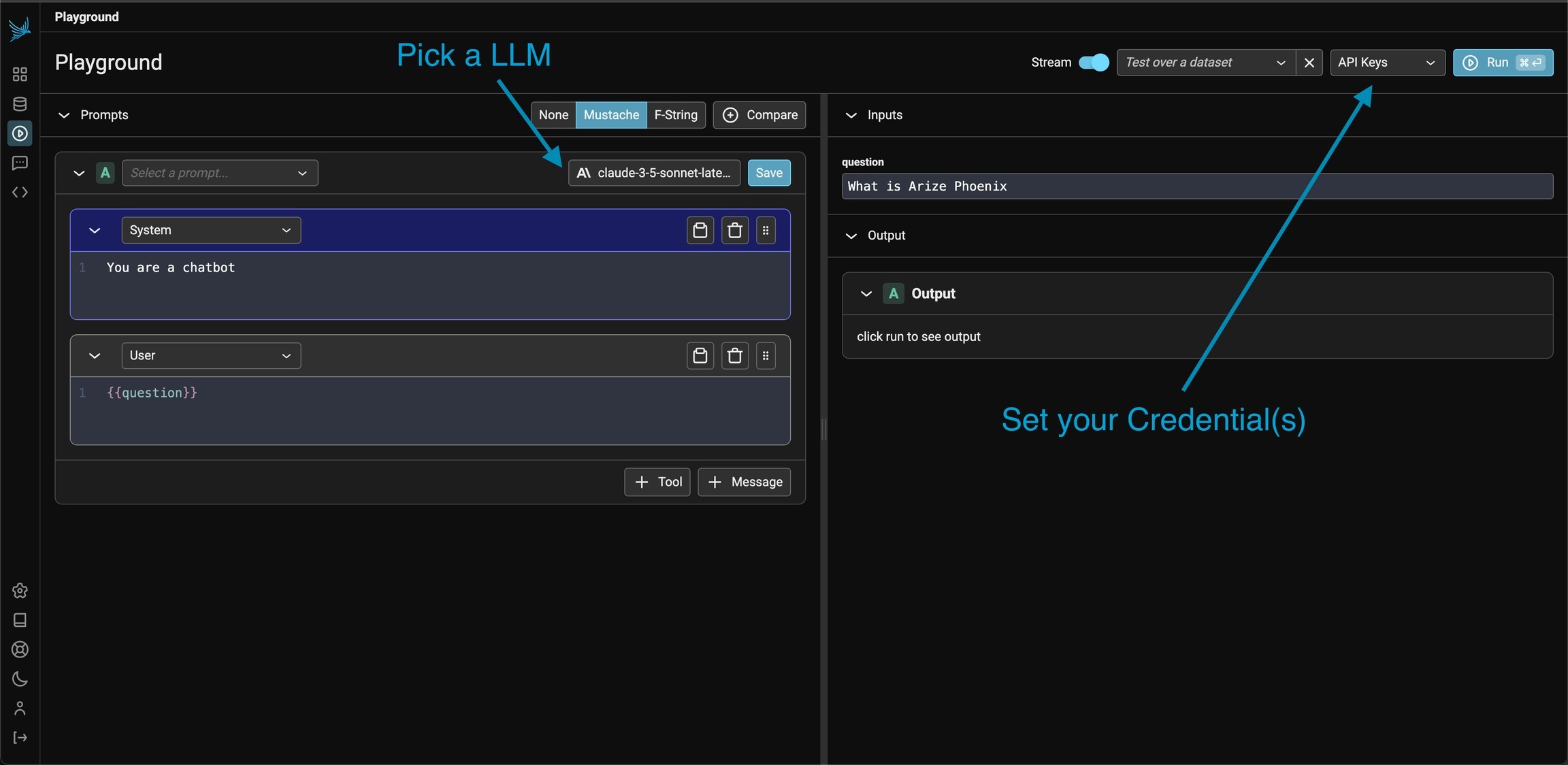

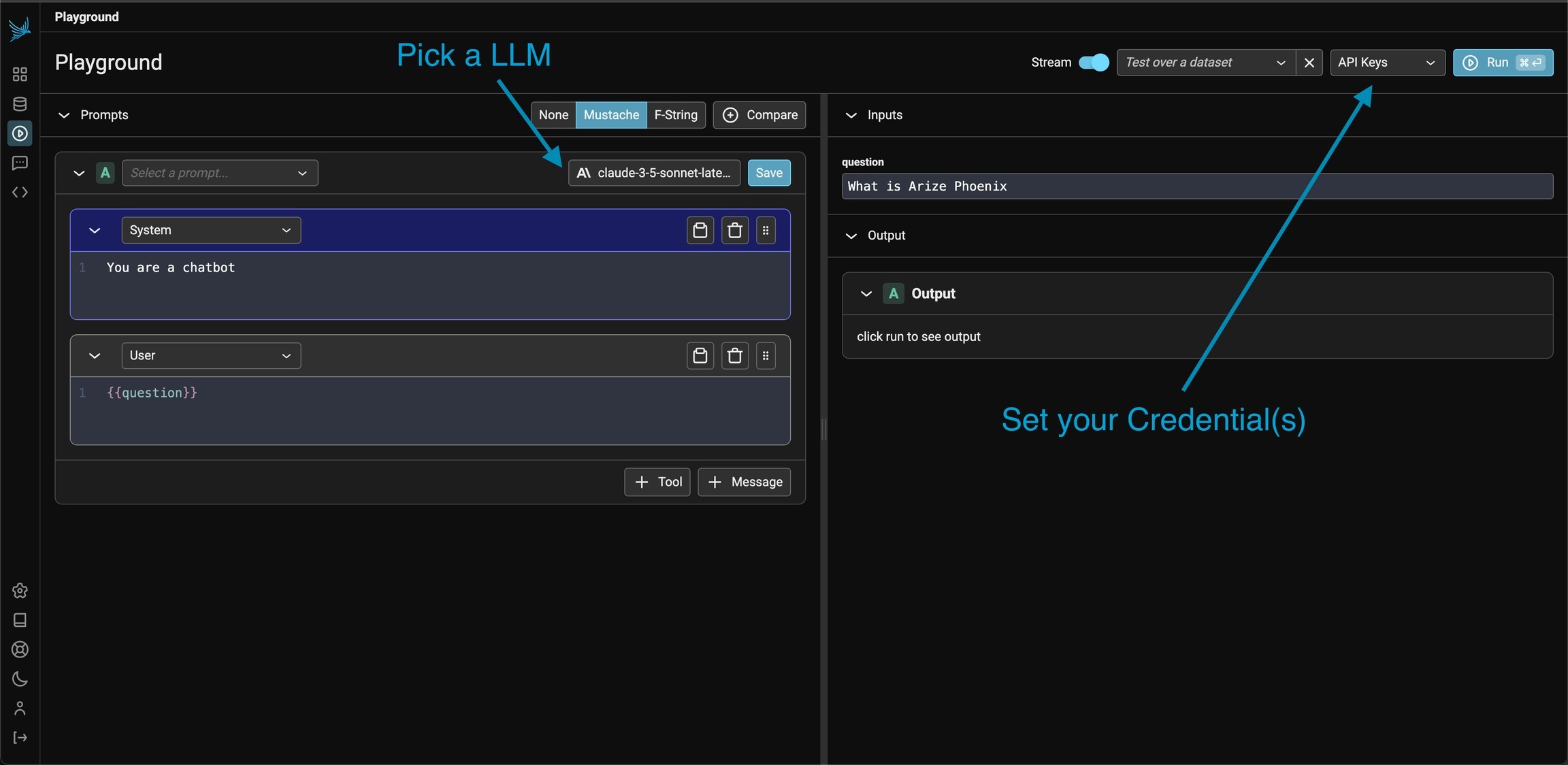

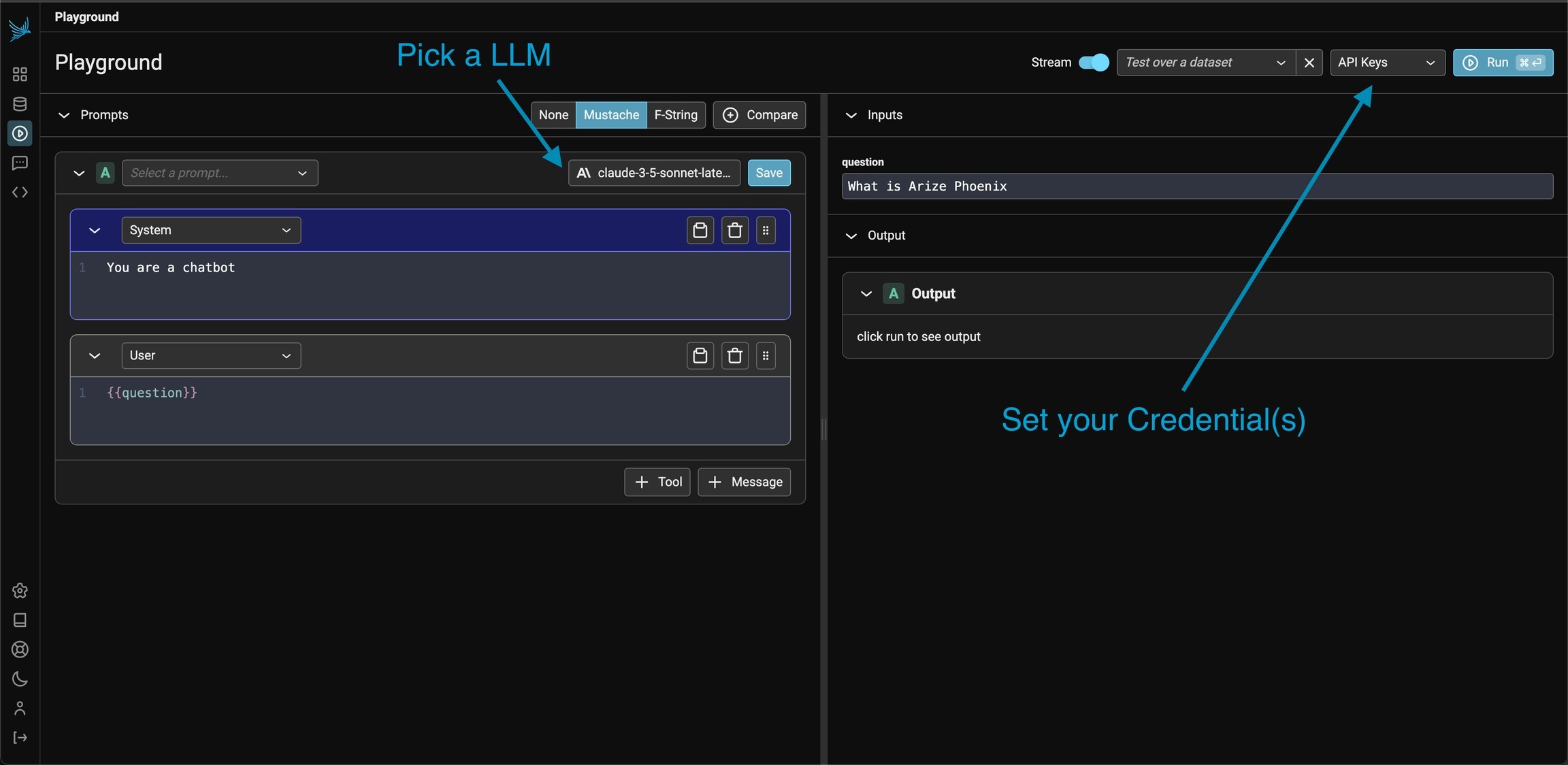

Arize Phoenix

Phoenix is an AI observability platform from Arize. It provides tools for tracing, evaluation, and prompt engineering. Phoenix is built on OpenTelemetry, which makes it highly extensible.

Key Features

Prompt Learning: Phoenix has a unique feature called Prompt Learning that automatically optimizes prompts based on performance.

Strong Evaluation: Phoenix provides powerful tools for evaluating prompts, including LLM-based evaluations and human feedback.

OpenTelemetry Native: Phoenix is built on OpenTelemetry, which is a standard for observability.

How it Works

Phoenix uses a Python SDK for interacting with prompts. The workflow is focused on evaluation and experimentation.

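    from phoenix.otel import register

    # Register an OpenTelemetry tracer provider that exports spans to Phoenix
    # ("my-app" is a placeholder project name)
    tracer_provider = register(project_name="my-app")
    tracer = tracer_provider.get_tracer(__name__)

    # Log a prompt and its response
    with tracer.start_as_current_span("my-prompt") as span:
        # Your LLM call here (llm is a placeholder for your own client)
        response = llm.complete(prompt="...", temperature=0.7)
        span.set_attribute("llm.response", str(response))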

Important Note on Licensing: Arize Phoenix is licensed under the Elastic License 2.0. This is a source-available license that is not approved by the Open Source Initiative (OSI). It restricts offering the software to others as a managed service. This makes it less flexible than MIT or Apache 2.0 licensed alternatives.

Latitude

Latitude is an open-source platform for both AI agents and prompt engineering. It has a broader scope than just prompt management, with a strong focus on building and deploying AI agents.

Key Features

Agent-Centric: Latitude is designed for building AI agents, not just managing prompts. It provides tools for creating complex workflows and integrating with external tools.

Extensive Integrations: Latitude has over 2,500 integrations with other tools and services.

PromptL: Latitude has its own custom language for defining prompts called PromptL.

How it Works

Latitude provides a TypeScript SDK for running prompts.

    import { Latitude } from '@latitude-data/sdk'

    const sdk = new Latitude(process.env.LATITUDE_API_KEY, {
      projectId: Number(process.env.PROJECT_ID),
      versionUuid: 'live',
    })

    const result = await sdk.prompts.run('my-prompt', {
      parameters: { product_name: 'Laptop' },
    })

Important Note on Licensing: Latitude is licensed under the LGPL-3.0. This is a weak copyleft license, which means that if you distribute a modified version of Latitude, you must make your changes available under the same license. Teams that plan to ship a modified version as part of a commercial product should review these terms first.

Pezzo

Pezzo is a developer-first, cloud-native LLMOps platform. It focuses on streamlining prompt design, version management, and instant delivery.

Key Features

Cloud-Native: Pezzo is designed to be deployed in a cloud-native environment.

Cost Optimization: Pezzo claims to save up to 90% on costs and latency through caching and other optimizations.

Apache 2.0 License: Pezzo is licensed under the Apache 2.0 license, which is a permissive open-source license.
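To see why caching can reduce both cost and latency, consider the minimal sketch below. It is not Pezzo's implementation; `CachedCompleter` and `fake_llm` are hypothetical names, and the sketch assumes exact-match caching, where a response is reused only when the prompt and all settings are identical.

```python
import hashlib
import json
from typing import Callable


class CachedCompleter:
    """Wrap an LLM call so identical requests are answered from memory."""

    def __init__(self, complete: Callable[[str, dict], str]) -> None:
        self._complete = complete
        self._cache = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str, settings: dict) -> str:
        """Build a stable cache key from the prompt and its settings."""
        payload = json.dumps({"prompt": prompt, "settings": settings}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def __call__(self, prompt: str, **settings) -> str:
        key = self._key(prompt, settings)
        if key in self._cache:
            self.hits += 1  # no provider call, so no token cost
            return self._cache[key]
        self.misses += 1
        result = self._complete(prompt, settings)
        self._cache[key] = result
        return result


def fake_llm(prompt: str, settings: dict) -> str:
    """Stand-in for a real provider call."""
    return f"echo: {prompt}"


completer = CachedCompleter(fake_llm)
first = completer("Explain AI", temperature=0)
second = completer("Explain AI", temperature=0)   # served from the cache
third = completer("Explain AI", temperature=1)    # different settings, new call
```

Exact-match caching like this mostly pays off for repeated, deterministic requests. How much it saves in practice depends on how often your traffic repeats itself.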

How it Works

Pezzo provides a Node.js/TypeScript client for consuming prompts.

    import { Pezzo, PezzoOpenAI } from "@pezzo/client";

    const pezzo = new Pezzo({
      apiKey: "<Your Pezzo API key>",
      projectId: "<Your Pezzo project ID>",
      environment: "Production",
    });

    const openai = new PezzoOpenAI(pezzo);

    const prompt = await pezzo.getPrompt("MyPrompt");

    const response = await openai.chat.completions.create(prompt, {
      variables: { topic: "AI" },
    });

Comparison

| Feature | Agenta | Langfuse | Arize Phoenix | Latitude | Pezzo |
|---|---|---|---|---|---|
| Primary Focus | Collaboration & Full Lifecycle | Observability & DevEx | AI Observability & Evaluation | AI Agents & Prompt Engineering | Cloud-Native Delivery & Cost |
| Versioning | Git-like (Variants & Commits) | Linear (Versions & Labels) | Linear (Tags) | Version Control | Commit & Publish |
| Collaboration | Excellent (UI for non-devs) | Good (UI for editing) | Fair (Developer-focused) | Good (Team workflows) | Fair (Developer-first) |
| Evaluation | Integrated (Auto, Human, Online) | Integrated (LLM-as-judge) | Excellent (Prompt Learning) | Integrated (Built-in, HITL) | Limited |
| License | MIT | MIT | Elastic License 2.0 (Non-OSI) | LGPL-3.0 (Copyleft) | Apache 2.0 |

Why Agenta is the Best Choice

While all these platforms offer valuable features, Agenta stands out for several reasons:

True Open-Source and Permissive: Agenta is licensed under the MIT license, which gives you broad freedom. You can use it, modify it, and build commercial products on top of it; the only condition is that you keep the copyright and license notice. This is a major advantage over Arize Phoenix and Latitude, which have more restrictive licenses.

Designed for the Whole Team: Agenta puts collaboration between developers and non-developers at the center of its design. The user-friendly UI lets product managers and subject matter experts edit prompts, run evaluations, and deploy changes themselves. This leads to faster iteration cycles and better results.

Complete LLMOps Lifecycle: Agenta provides an integrated solution for the entire LLM development lifecycle. You do not need to stitch together multiple tools for prompt management, evaluation, and observability. This simplifies your workflow and reduces complexity.

Flexible and Powerful Versioning: Agenta’s Git-like versioning system is both powerful and flexible. It allows you to manage complex experiments and deployments with ease.

Conclusion

Choosing the right prompt management platform is crucial for building reliable LLM applications. While each platform has its strengths, Agenta offers a unique combination of features that make it the best choice for most teams. Its focus on collaboration, its complete LLMOps lifecycle support, and its permissive MIT license make it a powerful and flexible solution.

Ready to take control of your prompt management? Check out our Quick Start guide or get started with Agenta today.

Copyright © 2020 - 2060 Agentatech UG
